Highlights:

NVIDIA benefits from the network effect of its entire ecosystem: CUDA, its installed base, system-level integration, optimization, and more. These advantages reinforce one another, forming a strong technical barrier.

NVIDIA has beaten revenue expectations for six consecutive quarters. Remarkably, even though expectations are raised every quarter, it still finds ways to surprise investors by a wide margin. In the past, when internet tech giants adopted a technology to build business barriers, they weighed mainly its growth potential. For today's AI giants, however, falling behind could cost them their core business. Their decision-making is no longer driven by calculating profits and returns, but by fear of outright losses. Buying NVIDIA chips is essentially buying insurance, and there is no room for flexibility.

In the long run, tech giants will keep seeking high-performance GPU sources outside NVIDIA, or building in-house solutions, to reduce their dependence on it. These efforts will most likely chip away at NVIDIA's dominant position in AI over time, but they will not displace it.

Introduction:

From time to time, a star company emerges that draws attention for a stock price that has soared over the years, while also sparking heated controversy because of sharp swings. This time it is NVIDIA, the global chip giant powering AI. Its stock price has risen nearly 50-fold in five years, and it currently ranks alongside Microsoft and Apple among the three most valuable companies in the world.

In a recent interview on the podcast The Crossing, RockFlow founder Vakee shared her view on whether NVIDIA's stock is in a bubble. She does not believe it is: a forward price-to-earnings (P/E) ratio of around 40 is within a reasonable range for such a leading company.
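For readers less familiar with the metric: forward P/E divides the current share price by the earnings per share that analysts expect over the next twelve months, and its inverse (the earnings yield) is what each dollar of share price is expected to earn. The minimal Python sketch below uses hypothetical inputs, a $120 share price and $3.00 of forward EPS, chosen only to reproduce a 40x multiple; they are not NVIDIA estimates, and the function names are illustrative.

```python
def forward_pe(price: float, forward_eps: float) -> float:
    """Share price divided by expected EPS over the next 12 months."""
    if forward_eps <= 0:
        # A P/E on zero or negative expected earnings is not meaningful.
        raise ValueError("forward EPS must be positive")
    return price / forward_eps


def earnings_yield(pe: float) -> float:
    """Inverse of P/E: expected annual earnings per dollar of share price."""
    return 1.0 / pe


# Hypothetical inputs, chosen only to reproduce a 40x forward multiple.
pe = forward_pe(price=120.0, forward_eps=3.0)
print(f"forward P/E = {pe:.1f}x, earnings yield = {earnings_yield(pe):.1%}")
# prints: forward P/E = 40.0x, earnings yield = 2.5%
```

Put differently, at 40x a buyer pays for about 2.5 cents of expected annual earnings per dollar of share price, so a multiple this high is only reasonable if earnings are expected to keep growing quickly.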

The recent pullback in semiconductor stocks, part of a broader pullback after the US stock market's outsized gains, is a normal market movement. In addition, stronger expectations of interest-rate cuts at the time boosted risk appetite, and some money rotated out of the largest companies into more aggressive small-cap stocks, which is also a normal sector rotation. Tighter US export restrictions and geopolitical tensions a few weeks ago also contributed to the decline of chip stocks led by NVIDIA, but these too are normal market reactions.

As for the sharp swings in the US stock market over the past week or two, they are mainly due to a combination of factors: weak US labor market data, a lack of surprises from the giants during earnings season, the unwinding of the yen carry trade, and potential conflict in the Middle East. Setting these external factors aside, however, and looking at NVIDIA specifically, we believe that given our long-term optimism about AI (likely the biggest opportunity for change in our generation), the industry's undisputed leading stock is still not in a bubble.

NVIDIA's performance over the past year has been impressive: it holds about 90% of the AI chip market, its annual revenue exceeds $60 billion, and its net profit margin exceeds 50%. Over the past five years, its compound annual revenue growth rate has reached 64%, far ahead of many S&P 500 companies.
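A 64% compound annual growth rate is easy to underestimate. The Python sketch below is a plain compound-growth calculation, not anything taken from RockFlow's own models, and the helper names are illustrative; it shows that sustaining 64% a year for five years multiplies revenue by roughly 11.9x.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: float) -> float:
    """Constant annual rate that turns `start` into `end` over `years` years."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# 64% compound annual growth sustained for five years.
multiple = growth_multiple(0.64, 5)
print(f"revenue multiple after 5 years: {multiple:.1f}x")  # about 11.9x

# Round trip: the multiple implies the original 64% rate.
print(f"implied CAGR: {implied_cagr(1.0, multiple, 5):.0%}")  # 64%
```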

Regarding the company's history and investment value, RockFlow's research team published a detailed analysis at the beginning of last year: https://mp.weixin.qq.com/s/6HD8UjKIH6c-BJx3bgERiw. In this article, we hope to explain why the market has not yet paid enough attention to NVIDIA's true competitive edge, and why we believe NVIDIA is not only a great company but also an investment with high potential returns.

RockFlow will continue to track developments and the latest market trends at high-quality US-listed companies in sectors such as biopharmaceuticals and AI. If you want to learn more about the background, investment value, and risk factors of these companies, you can read RockFlow's previous in-depth analyses:

- Chip king TSMC, where will it go after a trillion-dollar market value?

- AI is hot, but not yet a "bubble"

- When will C3.AI return to the uptrend?

- After NVIDIA, who will be the next early AI beneficiary?

1. What does NVIDIA's competitive edge rely on besides CUDA?

Investors who are bullish on NVIDIA believe they are betting on its future: once NVIDIA's higher expected growth is taken into account, it is no more expensive than other tech giants.
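One common way to make this "adjusted for growth" comparison concrete is the PEG ratio: P/E divided by expected annual earnings growth in percentage points. It is a rule of thumb, not a method the article specifies, and the two companies in the Python sketch below are hypothetical. The last calculation shows why forecast uncertainty matters: the ratio is only as reliable as the growth estimate behind it.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """P/E divided by expected annual EPS growth, in percentage points."""
    if growth_pct <= 0:
        raise ValueError("PEG is undefined for non-positive growth")
    return pe / growth_pct


# Hypothetical companies; the numbers are illustrative, not estimates.
mature = peg_ratio(pe=30.0, growth_pct=10.0)   # lower multiple, slow growth -> 3.0
grower = peg_ratio(pe=40.0, growth_pct=40.0)   # higher multiple, fast growth -> 1.0
print(f"mature: {mature:.1f}, high-growth: {grower:.1f}")

# If the growth forecast proves too optimistic, the same 40x multiple looks expensive.
grower_if_growth_halves = peg_ratio(pe=40.0, growth_pct=20.0)  # -> 2.0
print(f"high-growth, if growth halves: {grower_if_growth_halves:.1f}")
```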

The problem, however, is that the further out the market tries to forecast returns, the more uncertain those forecasts become. Microsoft and Apple are mature companies that earn money from large, stable customer bases. NVIDIA, by contrast, serves a newer but more promising market, so investors are far more divided about its prospects than about those of Microsoft and Apple.

For a long time, NVIDIA was regarded as the top maker of gaming graphics cards. With the rise of cryptocurrency mining, GPUs, the core of graphics cards, became increasingly popular. NVIDIA's GPUs are highly optimized for parallel processing: they break computationally demanding problems into smaller pieces and assign them to thousands of processor cores on the GPU at once, solving them faster and more efficiently than traditional computing methods.
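The decomposition idea can be sketched on a CPU. The Python example below splits an element-wise vector addition into independent chunks and hands each chunk to a worker thread; no chunk depends on another, which is the property that lets a GPU spread the same kind of work across thousands of cores. It is an analogy only: Python threads are limited by the global interpreter lock, so this shows the pattern rather than a speedup, and a CUDA kernel would typically assign one lightweight thread per element instead of a few large chunks. The function names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def add_chunk(a, b, out, start, stop):
    """Work for one worker: compute a[i] + b[i] for its own index range only."""
    for i in range(start, stop):
        out[i] = a[i] + b[i]


def parallel_vector_add(a, b, workers=4):
    """Split an element-wise add into independent chunks and run them concurrently."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    n = len(a)
    out = [0] * n
    if n == 0:
        return out
    chunk = -(-n // workers)  # ceiling division so every element is covered
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(add_chunk, a, b, out, start, min(start + chunk, n))
            for start in range(0, n, chunk)
        ]
        for future in futures:
            future.result()  # re-raise any exception from a worker
    return out


print(parallel_vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50], workers=2))
# prints: [11, 22, 33, 44, 55]
```

Because each worker writes only to its own slice of `out`, the workers never need to coordinate, which is the same independence that makes this kind of problem a good fit for a GPU.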

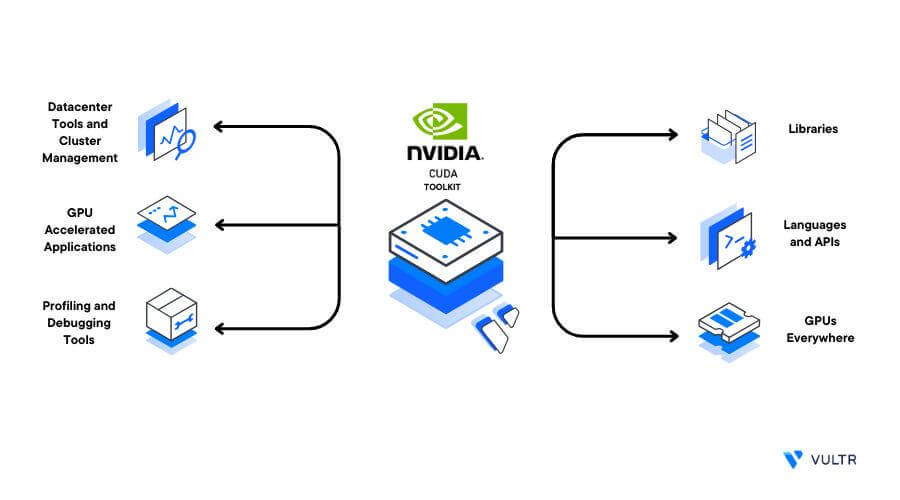

In addition to designing the most advanced GPUs on the market, NVIDIA created CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model that has become an industry standard and makes it easier for developers to use the capabilities of NVIDIA GPUs.

But NVIDIA's strength rests on more than the CUDA platform everyone talks about today. The RockFlow research team believes NVIDIA benefits from the network effect of its entire ecosystem: CUDA, its installed base, system-level integration, optimization, and more. It also actively pursues advanced bandwidth and networking solutions to improve computing performance. These advantages reinforce one another, forming a strong technical barrier.

First, consider the installed base. CUDA's nearly two-decade head start gives it a strong network effect: its huge installed base motivates framework authors and developers to target it, which in turn attracts more users. NVIDIA has a large number of users in gaming, professional visualization, and data centers. This user base gives NVIDIA a steady source of revenue and product feedback, and its scale lets NVIDIA keep investing in R&D and maintain its technological lead.

Second, there is system-level integration. NVIDIA provides not only GPU hardware but also the supporting software stack: from drivers to compilers to optimized libraries, it has built a complete ecosystem. This vertical integration lets NVIDIA optimize at the system level, delivering better performance and user experience.

On optimization, NVIDIA has invested deeply in both hardware and software. On the hardware side, it keeps refining its GPU architecture to improve performance and energy efficiency; on the software side, it unlocks the hardware's full potential through driver and library optimization. System-level techniques, such as multi-GPU collaboration and direct memory access for GPUs, further improve overall performance.

To address bandwidth and networking bottlenecks, NVIDIA has pursued several approaches. It has launched a series of technologies to speed up data transfer between GPUs and between CPUs and GPUs, the most important of which is NVLink. NVLink connects multiple GPUs directly over high-bandwidth links, significantly improving AI computing efficiency and helping NVIDIA maintain a strong position in autonomous driving and AI computing. Its bet on InfiniBand, its acquisition of Mellanox, and its move into Ethernet with NVIDIA Spectrum-X are further examples of its proactive strategy in networking.

The RockFlow research team believes that, for NVIDIA today, the huge installed base supplies the motivation and the data for continually optimizing GPU design, while system-level integration and optimization increase user stickiness and expand the installed base. Combined with the steady iteration of advanced solutions to bandwidth and networking problems, this virtuous cycle keeps NVIDIA in the lead in GPUs and accelerated computing.

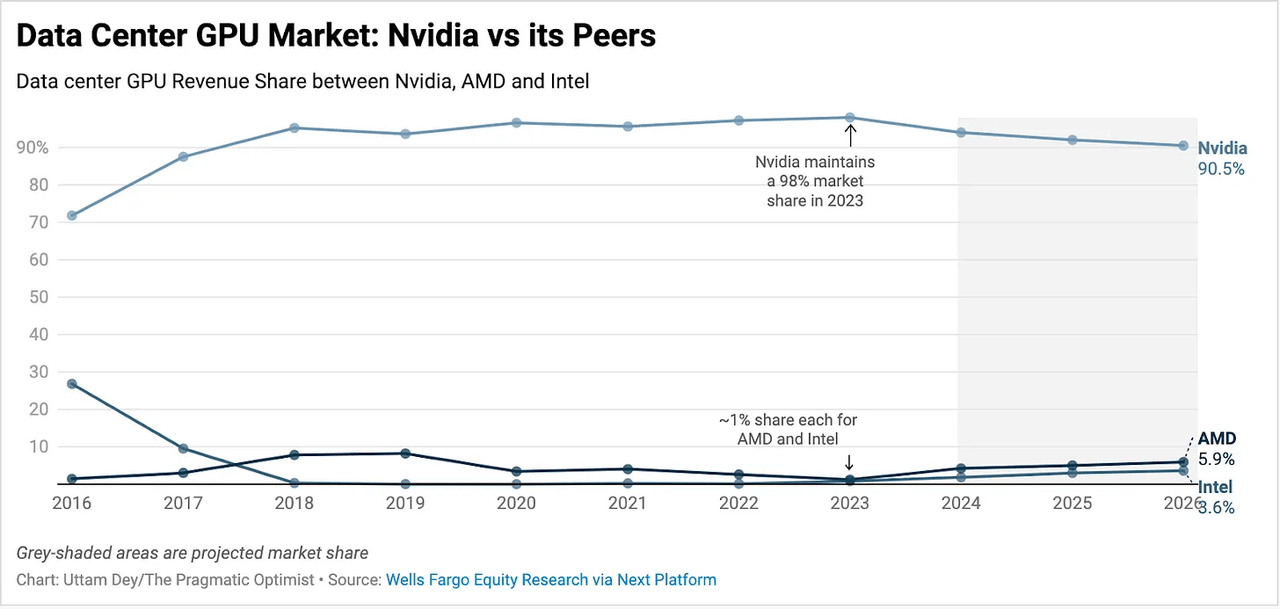

As a result, even though competitors AMD and Intel have launched comparable GPU products at lower prices, NVIDIA still dominates the AI chip market.

It is estimated that NVIDIA has held more than 90% of the data center GPU market for the past seven years, and its share reached 98% in 2023. Nearly all large data centers and large-model training runs rely on NVIDIA GPUs.

In the long run, NVIDIA may find it hard to hold on to its entire current market share, but as the markets for data center GPUs and other AI chips keep growing, its competitive edge should ensure that it wins the vast majority of orders. NVIDIA is expected to keep an important position in this new industrial revolution and maintain healthy long-term growth.

2. NVIDIA's performance miracle: expectations keep rising, yet it still surprises

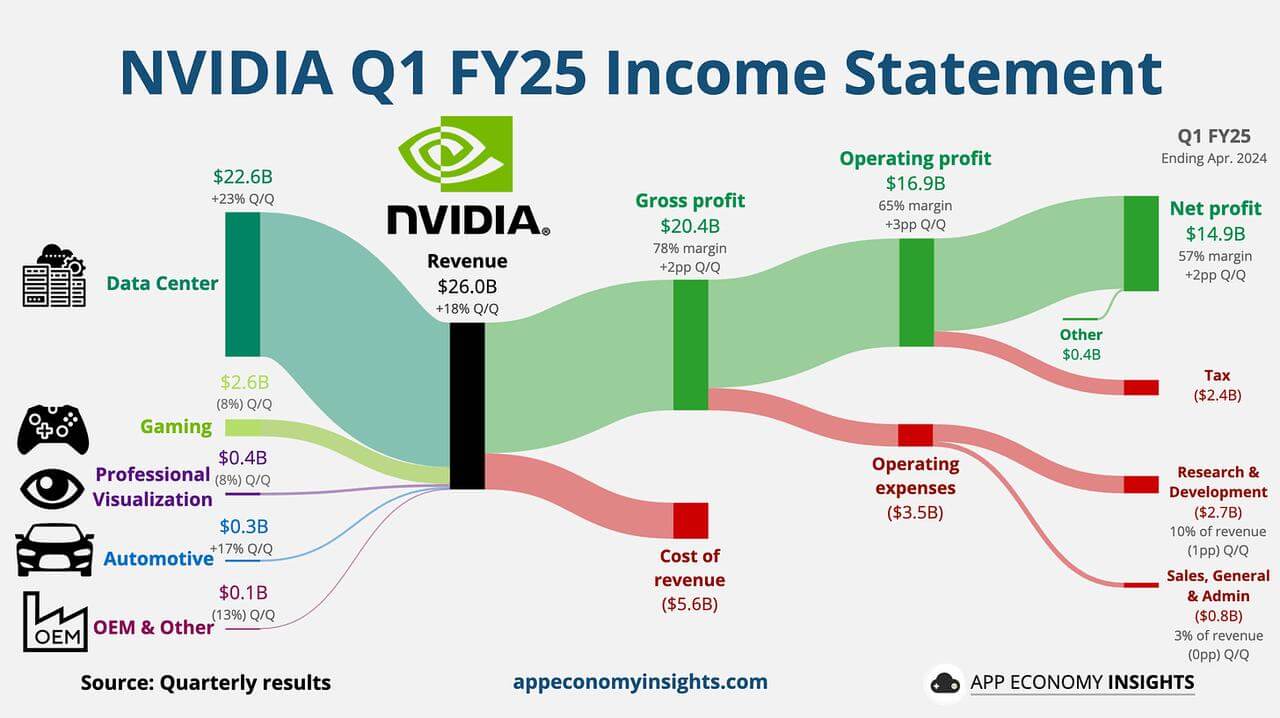

More than two months ago, NVIDIA reported its first-quarter results (fiscal 2025), and its stock rose 10% the next day. Its market value then surged, briefly overtaking Microsoft as the world's most valuable company.

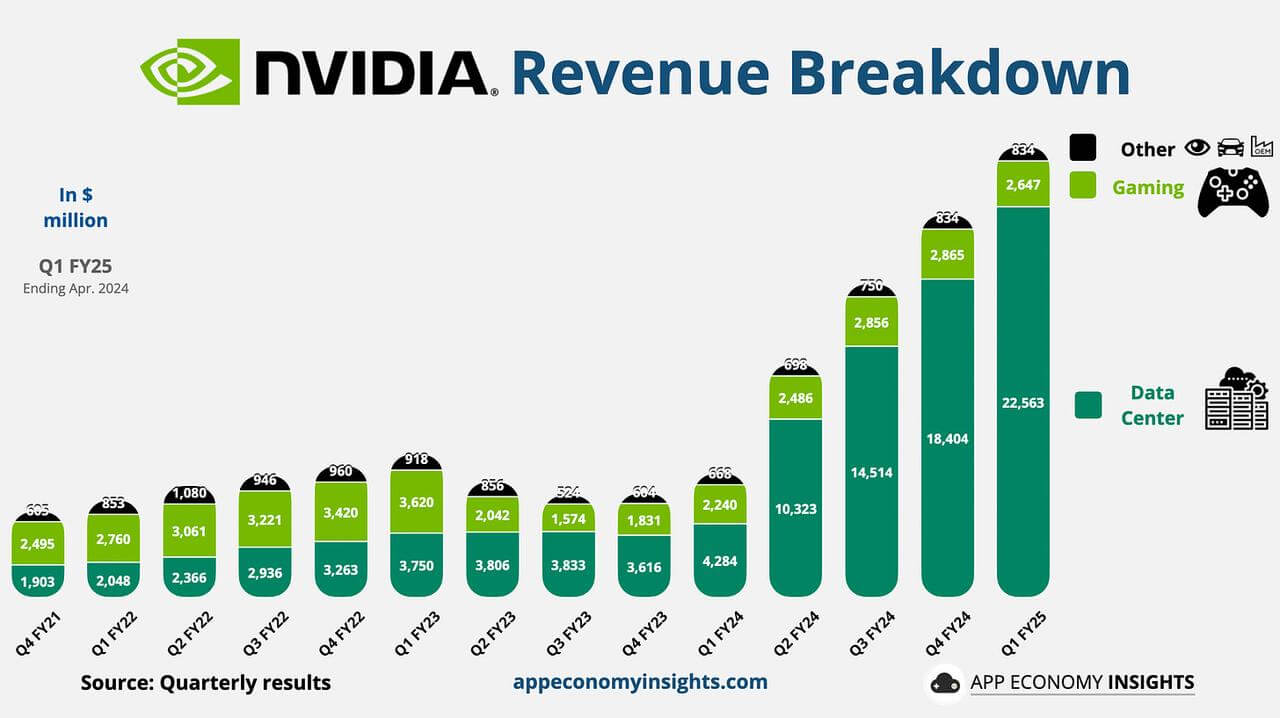

Last quarter's revenue breaks down as follows:

Revenue grew 18% quarter-over-quarter to $26 billion, beating expectations by $1.5 billion. Data center revenue grew 23% quarter-over-quarter to $22.6 billion, making it the largest and fastest-growing segment.
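These headline figures also imply a few numbers that are not stated directly. The Python sketch below backs out the prior-quarter totals from the quarter-over-quarter growth rates, the consensus estimate implied by the $1.5 billion beat, and data center's share of total revenue. Because the inputs are rounded, the results are approximations rather than reported figures; the helper name is illustrative.

```python
def prior_period(current: float, growth: float) -> float:
    """Undo one period of growth: current = prior * (1 + growth)."""
    return current / (1.0 + growth)


# Figures from the quarter described above, in billions of US dollars (rounded).
revenue, revenue_qoq = 26.0, 0.18
data_center, data_center_qoq = 22.6, 0.23
beat = 1.5  # amount by which revenue exceeded expectations

prior_revenue = prior_period(revenue, revenue_qoq)              # about 22.0
prior_data_center = prior_period(data_center, data_center_qoq)  # about 18.4
implied_consensus = revenue - beat                              # 24.5
data_center_share = data_center / revenue                       # about 0.869

print(f"prior-quarter revenue:     ${prior_revenue:.1f}B")
print(f"prior-quarter data center: ${prior_data_center:.1f}B")
print(f"implied consensus:         ${implied_consensus:.1f}B")
print(f"data center share:         {data_center_share:.1%}")
```

On these rounded inputs, data center accounts for roughly 87% of total revenue, which is why the segment drives the company's results.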

The chart illustrates just how extraordinary this growth has been.

In fact, NVIDIA has beaten revenue expectations for six consecutive quarters. Remarkably, even though expectations are raised every quarter, it still finds ways to surprise investors by a wide margin. Meanwhile, the profit margins of NVIDIA's chip business are also improving significantly.

On the earnings call, Jensen Huang said:

The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar installed base of traditional data centers to accelerated computing and build a new type of data center, AI factories, to produce a new commodity: artificial intelligence.

Consider the two core stages of running an AI system. In training, AI learns from large amounts of data and develops intelligence and pattern recognition; NVIDIA's powerful GPUs currently dominate this stage. In inference, AI applies what it has learned to real-world tasks and decisions; competition here is more intense, but NVIDIA is making significant progress.

Inference workloads contributed about 40% of NVIDIA's data center revenue over the past year. The current market consensus is that as more generative AI applications emerge, inference will become a huge market and bring NVIDIA's customers considerable returns on their investment.

Currently, NVIDIA groups its data center customers into three main categories. Cloud service providers (CSPs) contribute nearly half of data center revenue, and all the major players (Amazon, Microsoft, Google, etc.) are NVIDIA customers. Enterprises have driven strong, sustained growth: Tesla, for example, expanded its AI training cluster to 35,000 H100 GPUs and used it for FSD V12. Consumer internet companies are another key vertical: Meta's Llama 3 was trained on a cluster of 24,000 H100 GPUs, and the next-generation multimodal Llama 4 is expected to be trained with 240,000 GPUs and ten times the compute of Llama 3.

NVIDIA's management also highlighted several important directions on the conference call.

Regarding the data center business, they said: "As generative AI makes its way into more consumer internet applications, we expect to see continued growth opportunities, as inference scales with both model complexity and the number of users and queries per user, driving much more demand for AI compute."

In addition, NVIDIA is very optimistic about "sovereign AI": "Sovereign AI refers to a nation's capability to produce artificial intelligence using its own infrastructure, data, workforce, and business networks. The importance of AI has caught the attention of every nation, and major powers will pay more attention to controlling AI technology. We believe sovereign AI will generate billions of dollars in revenue this year."

Jensen Huang also addressed the impact of US export restrictions, the new-generation H200, and the Blackwell architecture. NVIDIA's stock rose steadily in the following weeks, reflecting the market's endorsement of his views and its optimism about the long-term development of the AI wave.

3. The biggest challenge remains the giants' in-house chip efforts

As mentioned earlier, the network effect NVIDIA has built around the CUDA ecosystem is an excellent example of a commercial company shaping a complete ecosystem. NVIDIA's huge success is due not only to the excellent performance of its chips but also to the way its combined hardware and software network has placed it firmly at the center of the generative AI wave.

At the same time, however, its strategy of bundling foundational software with its chips has drawn strong criticism from customers and competitors, and its high market share has led regulators to repeatedly launch antitrust investigations.

Currently, NVIDIA faces competition from AMD and other chipmakers, including Qualcomm and Intel. For their AI chips these companies act essentially as designers, and almost all of them rely on the same contract manufacturer: TSMC.

AMD and Intel have both launched data center accelerators aimed at winning share from NVIDIA's H100/H200: Intel with the Gaudi 3 AI accelerator and AMD with the MI300 series. 2024 may be the first year NVIDIA cedes a small share of the market to AMD, and Intel is also expected to gain a small share.

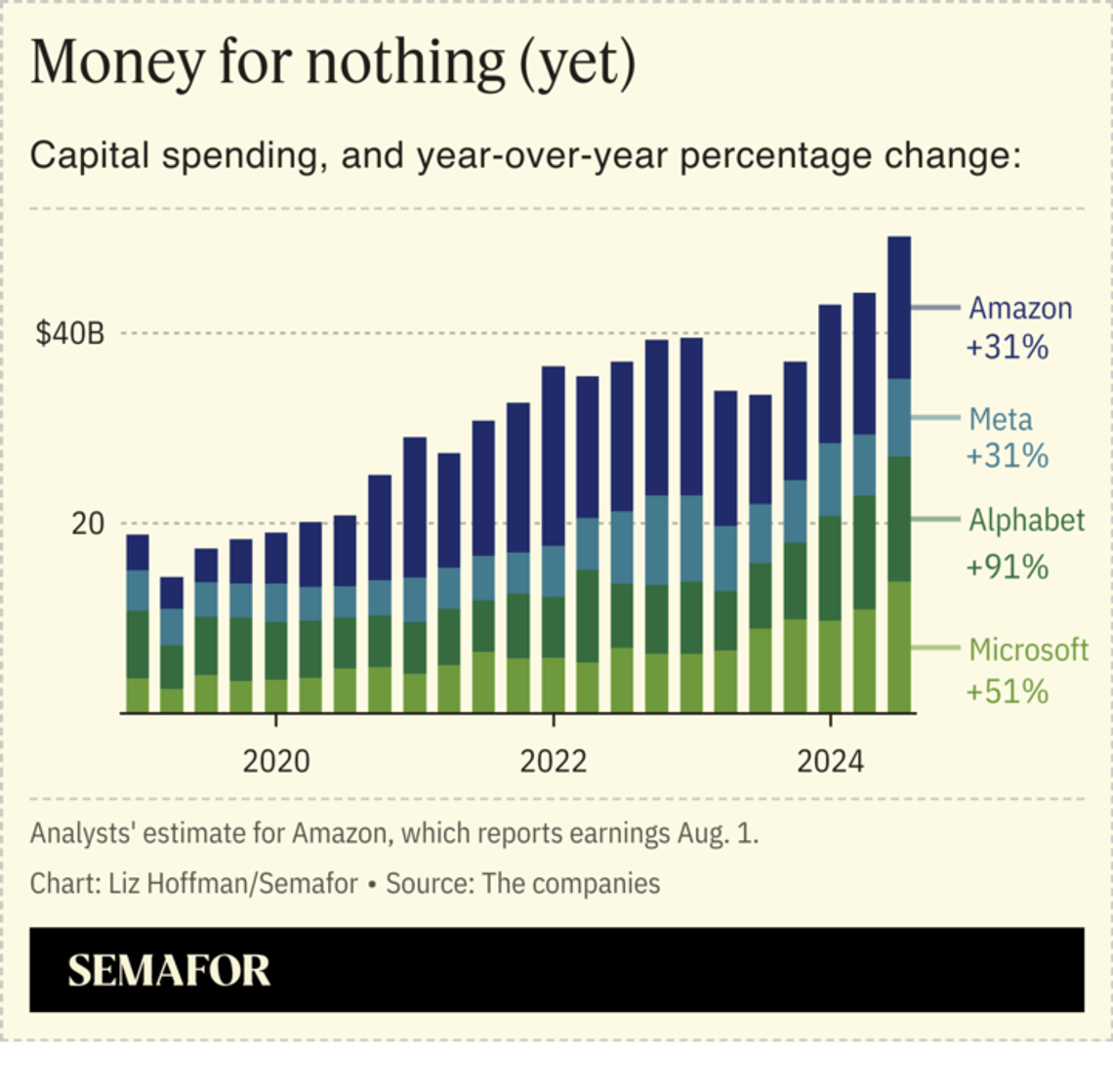

A single statistic shows why competitors are so determined: according to their latest quarterly reports, the combined capital expenditures of Microsoft, Google, and Meta for the second quarter of 2024 exceeded $40 billion, most of it spent on AI.

With so many giants investing so heavily, could their decision-makers one day be forced by internal and external pressure to calculate the ROI of their AI spending, conclude that it is not cost-effective, and start slowing down AI investment?

Our answer: this is almost impossible. In the past, when internet tech giants adopted a technology or built up a capability to create business barriers, they mainly considered growth, that is, whether it could accelerate the business. Generative AI changes this. For these giants, falling behind could cost them their companies and their core business. Procuring NVIDIA chips in this generative AI arms race therefore follows the logic of buying medicine rather than making an investment. When a technology can decide whether a company lives or dies, the company will not dwell on the details.

This is not an exaggeration. Search, for example, may be completely transformed by AI. Whether it is Google or Baidu, their decision-making today is no longer based on calculating profits and returns, but on fear of outright losses. Buying NVIDIA chips is essentially buying insurance, and at this point there is no room for flexibility.

Precisely because high-performance chips are so important, the giants, even as they spend heavily on capital expenditures, are also determined to develop their own chips or find alternatives. Faced with NVIDIA's comprehensive CUDA ecosystem, other companies (in fact, almost all of its competitors) are trying to jointly develop open solutions to break NVIDIA's grip on the AI software and hardware ecosystem.

Intel, Google, Arm, Qualcomm, Samsung, and other technology companies are collaborating through the UXL Foundation on a new software suite that lets developers' code run on any machine with any chip. OpenAI is also making efforts, having released Triton, an open-source language that lets researchers without CUDA experience write GPU code, and the open-source PyTorch Foundation, incubated by Meta, is making similar attempts.

These companies are also working to replace NVIDIA's proprietary hardware, including developing new ways to connect multiple AI chips within and across servers. Intel, Microsoft, Meta, AMD, Broadcom, and others hope to establish new industry standards for this connectivity technology, which is crucial to modern data centers. The contest between proprietary and open solutions resembles the one between Apple and Google's Android in the smartphone market, and as we have seen, both the closed and the open approach can produce winners.

In the long run, tech giants will keep seeking high-performance GPU sources outside NVIDIA, or building in-house solutions, to reduce their dependence on NVIDIA. These efforts will most likely chip away at NVIDIA's dominant position in AI over time, but they will not displace it.

Conclusion:

Over the past few years, NVIDIA's chips have pushed the company's profitability to new heights, and the RockFlow research team has long been optimistic about NVIDIA. When RockFlow founder Vakee was asked on The Crossing podcast, "Are there any signs that NVIDIA may be overtaken by competitors?", her answer was that there are none.

NVIDIA's advantage today is not a single product or the raw hardware advantage of a GPU. NVIDIA has built its core competitiveness on a complex ecosystem, which makes it especially hard to break. Its advantages include not only the CUDA platform but also its large user base and installed base, its overall system integration capability, and its ability to keep optimizing. Because these advantages reinforce one another, its overall competitive barrier will remain formidable.

When problems arise in its ecosystem, NVIDIA spares no effort to solve them, whether through acquisitions or investments. This continuous investment keeps raising its barriers in hardware, system integration, and network bandwidth. This virtuous cycle will further cement its position.

Therefore, we believe NVIDIA can sustain its past growth momentum and unlock greater value in the long run.

Author Profile:

The RockFlow research team has a long-term focus on high-quality companies in the US stock market, emerging markets such as Latin America and Southeast Asia, and high-potential industries such as crypto and biotechnology. Its core members come from leading technology companies and financial institutions such as Facebook, Baidu, ByteDance, Huawei, Goldman Sachs, and CITIC Securities, and most graduated from top universities such as the Massachusetts Institute of Technology, the University of California, Berkeley, Nanyang Technological University, Tsinghua University, and Fudan University.
